Your Search Console Has 10x More Content Ideas Than You Think: The 30-Day Ranking Framework

Most teams export their Search Console data into a spreadsheet, scan the top 20 keywords, and call it a day. That CSV sitting in your Downloads folder contains the raw material for a content engine that compounds every month — you just need a system to extract it.

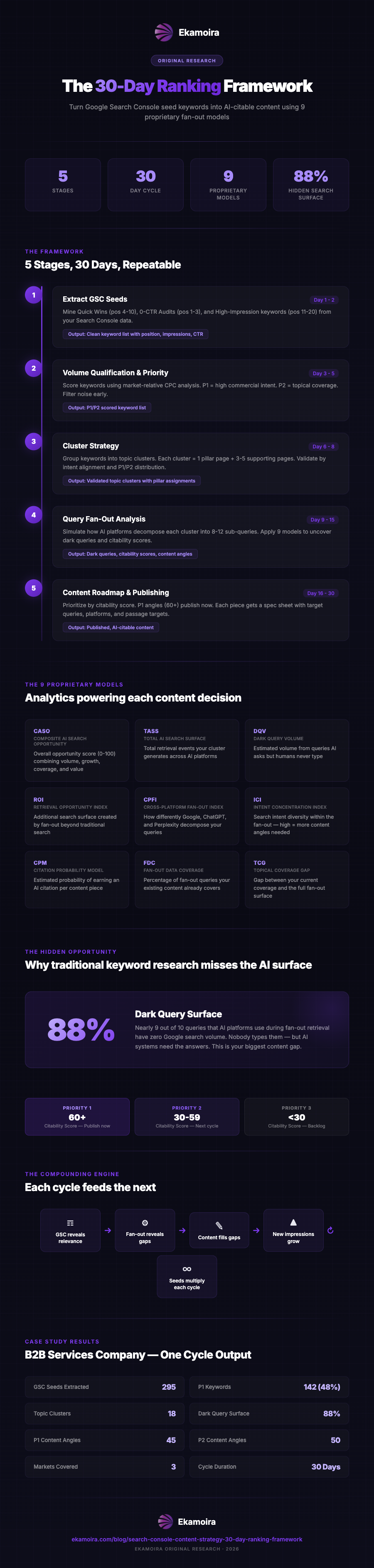

This article walks through the exact 5-stage framework we use to turn GSC seed keywords into published, AI-citable content in 30-day cycles. No theory. No "it depends." A repeatable process with measurable outputs at every stage.

The framework is built on query fan-out research showing that AI search platforms typically decompose a user query into 8-12 sub-queries before generating an answer. Your GSC data contains the seeds; the fan-out reveals the actual content surface you need to cover.

The 30-Day Ranking Cycle

The framework runs in 5 stages. Each stage has a defined input, a defined output, and a clear handoff to the next. One full cycle takes 30 days from GSC extraction to published content.

- Stage 1: Extract GSC Seeds (Days 1-2)

- Stage 2: Volume Qualification & Priority Scoring (Days 3-5)

- Stage 3: Cluster Strategy (Days 6-8)

- Stage 4: Query Fan-Out Analysis (Days 9-15)

- Stage 5: Content Roadmap & Publishing (Days 16-30)

The compounding effect: every cycle produces content that generates new GSC impressions, which become seeds for the next cycle. After three cycles, the system feeds itself.

Stage 1: Extract GSC Seeds

Your Search Console already tells you what Google thinks your site is relevant for. The trick is knowing which signals to extract and which to ignore.

The Three GSC Extraction Buckets

Quick Wins (Position 4-10, 100+ impressions)

These are keywords where you already rank on page one but haven't earned the click. A title or meta description change can move these to position 1-3 within days. They're also your highest-confidence fan-out seeds because Google has already validated your relevance.

0-CTR Audits (Position 1-3, 0% CTR)

You rank at the top and nobody clicks. This usually means one of three things: the AI Overview has absorbed the answer, your title doesn't match the search intent, or a rich result is stealing the click. Each scenario requires a different fix, and all three create fan-out opportunities.

Position 11-20 with High Impressions

Google shows your page for these queries but doesn't rank you on page one. These keywords reveal adjacent topic areas where you have authority but lack dedicated content. They're the best candidates for new pages.

What to Ignore

Keywords ranking beyond position 30 with fewer than 50 impressions per month. Branded queries, where your brand name is the query. Keywords for pages you plan to deprecate. Filtering these out early keeps noise from polluting the later stages.

Extraction Output

A clean keyword list with: query, position, impressions, clicks, CTR, and the landing page URL. This becomes the input for Stage 2.

Stage 2: Volume Qualification & Priority Scoring

Raw GSC data tells you what Google thinks you're relevant for. Volume qualification tells you which of those keywords represent real market opportunity.

Market-Relative CPC Analysis

Not all keywords with search volume deserve content. A keyword with 1,000 monthly searches and a $0.05 CPC signals informational intent with low commercial value. The same volume at $8.50 CPC signals purchase intent. We score keywords using market-relative CPC — the keyword's CPC compared to the median CPC in that vertical.

P1/P2 Scoring

Every keyword gets a priority score:

P1 (Priority 1) — High commercial intent, market-relative CPC above 75th percentile, and search volume above the vertical median. These become primary content targets.

P2 (Priority 2) — Moderate intent or volume. Valuable for topical coverage but not lead-generating on their own. These fill out cluster content.
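A minimal sketch of the P1/P2 rule, assuming each keyword carries a market-relative CPC (`rel_cpc`), a monthly `volume`, and a hypothetical boolean `commercial` flag for high commercial intent. It simplifies "moderate intent or volume" by treating every keyword that misses a P1 threshold as P2.

```python
from statistics import median, quantiles


def vertical_thresholds(rel_cpcs: list[float], volumes: list[int]) -> tuple[float, float]:
    """Return (75th percentile of market-relative CPC, median search volume)."""
    p75 = quantiles(rel_cpcs, n=4, method="inclusive")[2]  # third quartile cut point
    return p75, median(volumes)


def assign_priority(kw: dict, cpc_p75: float, volume_median: float) -> str:
    """kw needs 'rel_cpc', 'volume', and a hypothetical boolean 'commercial' flag."""
    if kw["commercial"] and kw["rel_cpc"] > cpc_p75 and kw["volume"] > volume_median:
        return "P1"  # primary content target
    return "P2"  # topical coverage / cluster support
```

Computing the thresholds per vertical keeps a high-CPC legal keyword from crowding out a keyword that is expensive only relative to a cheaper vertical.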

Anonymized Example: B2B Services Company

We ran this framework for a B2B services company operating across three markets (DACH, US, Southern Europe). Starting from their GSC data:

- 295 keywords extracted from GSC after filtering

- 142 P1 keywords (48%) with above-median CPC

- 153 P2 keywords (52%) for topical coverage

- 3 markets requiring language-specific analysis

The P1/P2 split matters because it determines how you allocate content resources in Stage 5. P1 keywords drive revenue. P2 keywords drive topical authority that lifts P1 rankings.

Stage 3: Cluster Strategy

Individual keywords don't win in AI search. Topic clusters do.

Our fan-out research found that sites with 80%+ topical coverage retain 85.4% of AI visibility despite 73% fan-out query instability. The implication: you need clusters, not pages.

From Keywords to Clusters

Take the 295 qualified keywords from Stage 2. Group them by semantic similarity and service line. The B2B services company's keywords clustered into 18 distinct topic groups:

- 4 clusters around core service offerings (45 keywords)

- 6 clusters around use-case scenarios (92 keywords)

- 5 clusters around comparison/evaluation queries (88 keywords)

- 3 clusters around implementation/how-to queries (70 keywords)

Each cluster becomes a content silo: one pillar page (targeting the P1 head term) supported by 3-5 supporting pages (targeting P2 long-tail terms within the cluster).
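The grouping step can be sketched with a simple greedy pass. This is a stand-in, not the method used in the example: word-overlap (Jaccard) similarity substitutes for semantic similarity, where a production setup might use embeddings instead, and the 0.3 threshold is a hypothetical value. Keywords are only compared within the same service line.

```python
def tokens(query: str) -> set[str]:
    return set(query.lower().split())


def jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def greedy_cluster(keywords: list[tuple[str, str]], threshold: float = 0.3) -> list[list[str]]:
    """Group (query, service_line) pairs into clusters of similar queries.

    Each cluster is represented by its first (seed) query; a keyword joins the
    most similar cluster in the same service line if similarity >= threshold,
    otherwise it starts a new cluster.
    """
    clusters: list[dict] = []
    for query, service_line in keywords:
        query_tokens = tokens(query)
        best, best_score = None, 0.0
        for cluster in clusters:
            if cluster["service_line"] != service_line:
                continue  # never mix service lines
            score = jaccard(query_tokens, cluster["seed_tokens"])
            if score > best_score:
                best, best_score = cluster, score
        if best is not None and best_score >= threshold:
            best["queries"].append(query)
        else:
            clusters.append(
                {"service_line": service_line, "seed_tokens": query_tokens, "queries": [query]}
            )
    return [cluster["queries"] for cluster in clusters]
```

Because clusters are anchored on their seed query, the result depends on input order; sorting keywords by impressions first is one way to let the strongest queries seed the clusters.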

Cluster Validation

A cluster is valid when it meets three criteria:

- At least one P1 keyword with commercial intent

- Three or more P2 keywords providing topical breadth

- Clear intent alignment — all keywords in the cluster answer variations of the same underlying question

Clusters that fail validation get merged into adjacent clusters or deprioritized.
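The three validation criteria translate into a short check. In this sketch, the `commercial` flag and the `intent` label (one label per underlying question) are hypothetical fields; deciding that two keywords "answer variations of the same underlying question" still needs human or model judgment upstream.

```python
from dataclasses import dataclass


@dataclass
class Keyword:
    query: str
    priority: str      # "P1" or "P2" from Stage 2
    commercial: bool   # hypothetical commercial-intent flag
    intent: str        # hypothetical label for the underlying question


def is_valid_cluster(keywords: list[Keyword]) -> bool:
    """Check the three cluster validation criteria."""
    has_commercial_p1 = any(k.priority == "P1" and k.commercial for k in keywords)
    p2_count = sum(1 for k in keywords if k.priority == "P2")
    single_intent = len({k.intent for k in keywords}) == 1  # intent alignment
    return has_commercial_p1 and p2_count >= 3 and single_intent
```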

Stage 4: Query Fan-Out Analysis

This is where the framework diverges from traditional keyword research. Instead of stopping at search volume and competition, we simulate how AI search platforms will decompose these clusters into sub-queries.

Why Fan-Out Matters for Content Strategy

When someone asks Google AI Mode for "best project management tools for remote teams," the system doesn't just retrieve pages ranking for that phrase. It fires 8-12 sub-queries, such as:

- "project management tool pricing comparison 2026"

- "remote team collaboration features"

- "project management security compliance"

- "user reviews project management software"

- "implementation timeline project management"

Each sub-query retrieves different content. If your content only answers the head term, you're invisible to 8-11 of those retrieval events. This is the 88% visibility gap identified in our research.

The 9 Proprietary Models

We apply 9 analytical models to each cluster's fan-out data. The full methodology is documented in our original research, but here's what each model contributes to content strategy:

| Model | What It Tells You |
|---|---|
| CASO (Composite AI Search Opportunity) | Overall opportunity score (0-100) combining volume, growth, coverage, and value |
| TASS/FME (Total AI Search Surface) | How many total retrieval events your cluster generates across AI platforms |
| DQV (Dark Query Volume) | Estimated volume from queries AI platforms ask but humans don't type; these are your biggest content gaps |
| ROI (Retrieval Opportunity Index) | How much additional surface the fan-out creates beyond traditional search |
| CPFI (Cross-Platform Fan-Out Index) | Whether Google, ChatGPT, and Perplexity fan out differently for your cluster |
| ICI (Intent Concentration Index) | How diverse the search intent is within the fan-out; high diversity means you need more content angles |
| CPM (Citation Probability Model) | Your estimated probability of earning an AI citation per content piece |
| FDC (Fan-Out Data Coverage) | What percentage of fan-out queries your existing content already covers |
| TCG (Topical Coverage Gap) | The gap between your current coverage and the full fan-out surface |
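The models themselves are proprietary, but FDC and TCG are described plainly enough to sketch. The version below assumes a query counts as "covered" only on an exact match against a set of queries your content answers, and that TCG is simply the complement of FDC; both are simplifying assumptions, not the documented method.

```python
def fan_out_coverage(
    fan_out_queries: set[str], covered_queries: set[str]
) -> tuple[float, float, list[str]]:
    """Return (FDC %, TCG %, uncovered queries) for one cluster.

    Assumptions: coverage is exact query matching, and TCG is the complement
    of FDC. Real coverage checks would need fuzzier matching against content.
    """
    if not fan_out_queries:
        return 0.0, 0.0, []
    covered = fan_out_queries & covered_queries
    fdc = 100 * len(covered) / len(fan_out_queries)
    gaps = sorted(fan_out_queries - covered_queries)
    return fdc, 100 - fdc, gaps
```

Returning the uncovered queries alongside the percentages makes the gap actionable: that list is the raw input for new content angles.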

Dark Queries: The Hidden Content Surface

The most valuable output of Stage 4 is the dark query list. These are questions AI platforms ask during fan-out that have zero Google search volume. Nobody types them, but AI systems need the answers.

For the B2B services company, fan-out analysis revealed:

- 88% dark query surface — nearly 9 out of 10 fan-out queries had no measurable search volume

- 45 P1 content angles — high-citability dark queries mapped to commercial clusters

- 50 P2 content angles — supporting dark queries that build topical coverage

Dark queries are why traditional keyword research, which only sees queries with measurable search volume, misses so much of the AI citation surface: in this case, 88% of the fan-out queries. Your GSC seeds are the starting point, but the fan-out reveals the actual surface.

Citability Scoring

Not all dark queries are equal. We score each by citability (0-100), which predicts how likely content answering that query would earn an AI citation. The score combines:

- Platform weight (0-40): Which AI platforms generate this sub-query

- Intent weight (0-40): Evaluative intent scores highest (AI cites comparison content), navigational scores lowest

- Topicality bonus (0-20): How closely the query relates to your cluster's core topic

Content angles with 60+ citability scores go into Priority 1. These are the pages you publish first.
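Here is one way the three components could add up to a 0-100 score. Only the component ranges and the intent ordering (evaluative highest, navigational lowest) come from the description above; the platform formula, the individual intent weights, and the 0-1 `topicality` input are hypothetical choices for illustration.

```python
TRACKED_PLATFORMS = frozenset({"google", "chatgpt", "perplexity"})

# Hypothetical intent weights: only the ordering (evaluative highest,
# navigational lowest) comes from the article.
INTENT_WEIGHTS = {"evaluative": 40, "transactional": 25, "informational": 20, "navigational": 5}


def citability(platforms: set[str], intent: str, topicality: float) -> int:
    """Score a dark query 0-100 from platform, intent, and topicality components."""
    # Platform weight (0-40): share of tracked AI platforms that generate the sub-query.
    platform_pts = 40 * len(platforms & TRACKED_PLATFORMS) / len(TRACKED_PLATFORMS)
    # Intent weight (0-40).
    intent_pts = INTENT_WEIGHTS[intent]
    # Topicality bonus (0-20): topicality is assumed to be a 0-1 similarity score.
    topic_pts = 20 * min(max(topicality, 0.0), 1.0)
    return round(platform_pts + intent_pts + topic_pts)
```

Under these weights, an evaluative query generated by Google and Perplexity with 0.75 topicality scores 82 and clears the 60-point Priority 1 bar, while a navigational query seen only on Google lands well below it.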

Stage 5: Content Roadmap & Publishing

Stages 1-4 produce the what. Stage 5 produces the when and how.

From Clusters to Content Calendar

Each cluster's content angles are ordered by citability score:

- Priority 1 (Citability 60+): Publish in the current 30-day cycle

- Priority 2 (Citability 30-59): Schedule for the next cycle

- Priority 3 (Citability <30): Backlog — revisit after two cycles
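The tiering rule maps directly to code. A minimal sketch, assuming content angles arrive as a hypothetical `{angle: citability}` dict; angles are ordered by citability before bucketing, so each tier lists its strongest angles first.

```python
def content_priority(citability: int) -> str:
    if citability >= 60:
        return "P1"  # publish in the current 30-day cycle
    if citability >= 30:
        return "P2"  # schedule for the next cycle
    return "P3"  # backlog: revisit after two cycles


def build_calendar(angles: dict[str, int]) -> dict[str, list[str]]:
    """Bucket content angles by priority, highest citability first within each bucket."""
    calendar: dict[str, list[str]] = {"P1": [], "P2": [], "P3": []}
    for angle, score in sorted(angles.items(), key=lambda item: item[1], reverse=True):
        calendar[content_priority(score)].append(angle)
    return calendar
```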

For the B2B services company, this produced:

| Priority | Content Angles | Publication Timeline |
|---|---|---|
| P1 | 45 articles | Current cycle (Days 16-30) |
| P2 | 50 articles | Next cycle |
| P3 | Backlog | Re-evaluate after Cycle 2 |

Content Specifications

Each content angle gets a spec sheet generated from the fan-out data:

- Target queries — the dark queries this content should answer

- Target platforms — which AI platforms generate these sub-queries (Google, ChatGPT, Perplexity)

- Intent type — informational, evaluative, transactional, or navigational

- Citability score — the expected AI citation probability

- Passage targets — specific 134-167 word self-contained passages the content must include (this length range achieves the highest AI citation rates per research by Wellows)

- Internal link targets — which existing pages in the cluster to link from
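A spec sheet fits naturally into a small record type, and the passage-length target is easy to check against drafts. In this sketch the field names are hypothetical, `passage_targets` is assumed to hold the question each passage must answer, and words are counted by splitting on whitespace, which is only an approximation.

```python
from dataclasses import dataclass, field

# Word-count range cited in the spec above.
PASSAGE_MIN_WORDS, PASSAGE_MAX_WORDS = 134, 167


@dataclass
class ContentSpec:
    angle: str
    target_queries: list[str]          # dark queries the piece should answer
    target_platforms: list[str]        # e.g. ["google", "chatgpt", "perplexity"]
    intent: str                        # informational, evaluative, transactional, navigational
    citability: int                    # 0-100 score from Stage 4
    passage_targets: list[str] = field(default_factory=list)  # assumed: one question per passage
    internal_links_from: list[str] = field(default_factory=list)  # existing cluster URLs


def passage_word_count_ok(passage: str) -> bool:
    """Naive whitespace word count checked against the 134-167 word target."""
    return PASSAGE_MIN_WORDS <= len(passage.split()) <= PASSAGE_MAX_WORDS


def out_of_range_passages(drafts: list[str]) -> list[int]:
    """Indexes of draft passages that fall outside the target range."""
    return [i for i, text in enumerate(drafts) if not passage_word_count_ok(text)]
```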

The Compounding Effect

Here's why 30-day cycles matter: published pages typically start generating new GSC impressions within 2-3 weeks. Those impressions become seeds for the next cycle. Over three cycles:

- Cycle 1: 295 GSC seeds → 18 clusters → 45 P1 articles published

- Cycle 2: 450+ GSC seeds (original + new impressions) → expanded clusters → 50+ articles

- Cycle 3: 700+ GSC seeds → new clusters emerge → the system self-sustains

Each cycle is faster than the last because existing content already covers part of the fan-out surface. The FDC (Fan-Out Data Coverage) model tracks this: it tells you what percentage of fan-out queries your published content now answers.
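The handoff between cycles amounts to merging the previous seed set with queries from the newest export. This is a minimal sketch, assuming the export is a hypothetical `{query: impressions}` dict and using an assumed `min_impressions` floor; in practice new queries would go back through the Stage 1 buckets and filters before entering Stage 2.

```python
def next_cycle_seeds(
    previous_seeds: set[str], latest_export: dict[str, int], min_impressions: int = 50
) -> tuple[set[str], set[str]]:
    """Return (seed set for the next cycle, seeds discovered this cycle).

    latest_export maps query -> impressions from the newest GSC export.
    min_impressions is a hypothetical floor for admitting a query.
    """
    fresh = {query for query, impressions in latest_export.items() if impressions >= min_impressions}
    discovered = fresh - previous_seeds
    return previous_seeds | fresh, discovered
```

Tracking the `discovered` set separately shows how much of each cycle's growth comes from newly published content rather than from the original seeds.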

Results: What This Framework Produces

Running the full 5-stage framework for the B2B services company produced:

| Metric | Output |
|---|---|
| GSC seeds extracted | 295 keywords |
| P1 keywords | 142 (48%) |
| P2 keywords | 153 (52%) |
| Topic clusters | 18 |
| Dark query surface | 88% |
| P1 content angles | 45 |
| P2 content angles | 50 |
| Markets covered | 3 (DACH, US, Southern Europe) |

The framework isn't theoretical. Every number comes from running real GSC data through the 9 models. The 30-day cycle means you measure results in weeks, not quarters.

The Reward-Based Organic Model

Traditional content strategy is linear: research keywords, write content, hope it ranks. This framework is cyclical and self-reinforcing:

- GSC data reveals what Google already considers you relevant for

- Fan-out analysis reveals what AI platforms need that nobody is providing

- Published content generates new GSC impressions

- New impressions become seeds for the next cycle

- Each cycle compounds because existing content already covers part of the surface

After three cycles, the content engine runs itself. Your GSC data grows, your fan-out coverage grows, your AI citations grow — and each feeds the other.

Next Steps

If you're sitting on GSC data and publishing content based on search volume alone, you're leaving 88% of AI citation opportunities on the table.

Two ways to apply this framework:

Read the research. Our original query fan-out research documents the full methodology behind the 9 models, including the data from 173,902 URLs showing why fan-out coverage drives AI citations.

Get the analysis done for you. We run this framework as a done-for-you service — your GSC data, our models, published content in 30 days. Book a call to see if it fits.

For agencies and consultancies looking to offer this to clients, see our partnership program.

About the Author

Co-founder of Ekamoira. Building AI-powered SEO tools to help brands achieve visibility in the age of generative search.

